17. Doing More With Your GAN


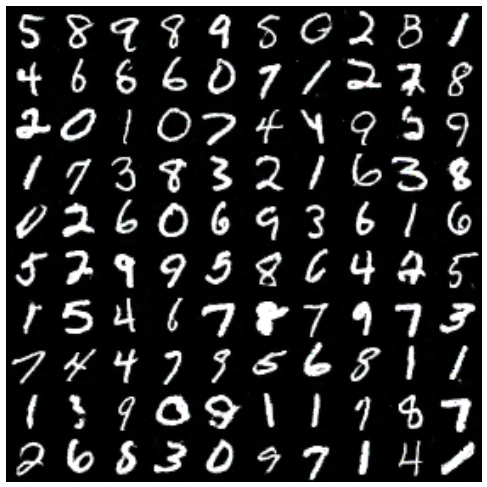

The GAN I showed you used only one hidden layer in the generator and discriminator. The results of this GAN were pretty impressive, but there were still a lot of noisy images and images that didn't really look like numbers. But, it's completely possible to have the generator make images indistinguishable from the MNIST dataset.

MNIST images generated from a trained GAN (https://arxiv.org/pdf/1606.03498.pdf)

These samples are from the paper Improved Techniques for Training GANs. So how did the authors produce such realistic images?

As with most neural networks, adding layers can improve performance. Instead of just one hidden layer, try two or three, and try larger layers with more units. See how each change affects your results.
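To make depth and width easy to experiment with, it helps to treat the list of hidden-layer sizes as a parameter. Below is a minimal forward-pass sketch in plain Python (no training, no framework), assuming a 100-dimensional noise vector `z`, leaky ReLU hidden activations, and a tanh output layer that produces 784 values for a flattened 28×28 MNIST image. The helper names (`build_generator`, `generate`, and so on) and the weight-initialization scale are hypothetical choices made for illustration; the same pattern applies to the discriminator.

```python
import math
import random


def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: passes positive values through, scales negatives by alpha."""
    return x if x > 0 else alpha * x


def init_layer(n_in, n_out, rng):
    """Create an (n_in x n_out) weight matrix and a bias vector for one dense layer."""
    # Illustrative choice: Gaussian weights with standard deviation 1/sqrt(n_in).
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_out)] for _ in range(n_in)]
    biases = [0.0] * n_out
    return weights, biases


def dense(x, layer):
    """Compute x @ W + b for a single input vector x."""
    weights, biases = layer
    out = list(biases)
    for i, xi in enumerate(x):
        row = weights[i]
        for j in range(len(out)):
            out[j] += xi * row[j]
    return out


def build_generator(z_size, hidden_sizes, out_size, seed=0):
    """Create one dense layer per entry in hidden_sizes, plus an output layer."""
    rng = random.Random(seed)
    sizes = [z_size] + list(hidden_sizes) + [out_size]
    return [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]


def generate(layers, z):
    """Forward pass: leaky ReLU on every hidden layer, tanh on the output layer."""
    h = z
    for layer in layers[:-1]:
        h = [leaky_relu(v) for v in dense(h, layer)]
    return [math.tanh(v) for v in dense(h, layers[-1])]


# One hidden layer (like the original GAN) versus three wider hidden layers.
one_hidden = build_generator(100, [128], 784)
three_hidden = build_generator(100, [128, 256, 512], 784)
z = [random.uniform(-1, 1) for _ in range(100)]
image = generate(three_hidden, z)
print(len(one_hidden), len(three_hidden), len(image))  # 2 4 784
```

Going from one hidden layer to three only changes the `hidden_sizes` list, which makes it easy to compare configurations side by side.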

Batch Normalization

Just as a heads up: you probably won't get three layers to work as-is. Deeper networks become very sensitive to the initial values of the weights and often fail to train. Batch normalization addresses this problem. The idea is simple: just as we normalize the inputs to our networks, we can normalize the inputs to each layer. That is, we scale each layer's inputs to have zero mean and a standard deviation of 1, using statistics computed over the current mini-batch. In practice, batch normalization has been found to be necessary for training deep GANs.
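A minimal sketch of the normalization step for a fully connected layer, written in plain Python so it runs without any framework. It computes each feature's mean and variance over the mini-batch (which is where the name comes from), normalizes, then applies an optional per-feature scale `gamma` and shift `beta`. In a real network `gamma` and `beta` are learned parameters, and at inference time running averages of the statistics replace the per-batch ones; this sketch covers only the training-time forward pass. The names `batch_norm`, `gamma`, `beta`, and `eps` are illustrative, not a particular library's API.

```python
import math


def batch_norm(batch, gamma=None, beta=None, eps=1e-5):
    """Normalize each feature (column) of a mini-batch to zero mean, unit variance.

    batch: list of examples, each a list of feature values (examples x features).
    gamma, beta: optional per-feature scale and shift (learned in a real network).
    eps: small constant that keeps the division stable when the variance is ~0.
    """
    n = len(batch)
    n_features = len(batch[0])
    gamma = gamma if gamma is not None else [1.0] * n_features
    beta = beta if beta is not None else [0.0] * n_features

    out = [[0.0] * n_features for _ in range(n)]
    for j in range(n_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n
        var = sum((v - mean) ** 2 for v in column) / n  # variance over the batch
        std = math.sqrt(var + eps)
        for i, v in enumerate(column):
            out[i][j] = gamma[j] * (v - mean) / std + beta[j]
    return out


# Four examples whose two features are on very different scales.
layer_inputs = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0], [4.0, 800.0]]
normalized = batch_norm(layer_inputs)
for j in range(2):
    col = [row[j] for row in normalized]
    mean = sum(col) / len(col)
    std = math.sqrt(sum((v - mean) ** 2 for v in col) / len(col))
    # Each column should now have a mean of about 0 and a std of about 1.
    print(mean, std)
```

After normalization both features land on the same scale, so the next layer sees well-conditioned inputs regardless of how the previous layer's weights were initialized.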

We'll cover batch normalization in next week's lesson, as well as using convolutional networks for the generator and discriminator.